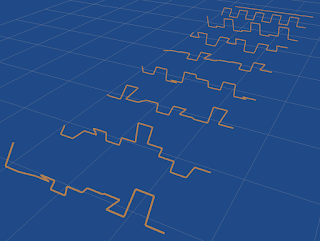

We can also look for a mean set of points. This has continuous rather than discrete symmetries. The starting point is the 1D case: what is the mean set of repeating point locations? The answer depends on how many points are in the sequence, so here it is for the first 10 point counts:

The images on the left represent the sequence as the points in a circle (rendered as radial spokes).

As you can see, the result is fairly trivial: the sequence appears to be symmetric, with a single largest gap and progressively smaller gaps on either side of it.

If we include reflection of the sequence in the equivalence class, the results appear to be exactly the same.

2D

We could explore the 2D case in a toroidal universe, as in the previous mean textures post, but this doesn't easily allow a continuous rotational symmetry. Instead, let's look at a spherical universe and find the mean set of n random points on a sphere.

To do this, I need a way to find the least-squares orientation between two sets of points on a sphere. To avoid the uncertainties of approximate methods such as Iterative Closest Point, I compare all permutations of the points and run an absolute orientation function (Eigen::umeyama) on each permutation. The rotation R with the smallest sum of squared differences is chosen and applied to one set of points before the two sets are averaged and renormalised.
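As a rough illustration of that procedure, here is a minimal Python sketch. It is not the code used for the results in this post: it swaps Eigen::umeyama for Horn's quaternion method solved by power iteration, fits a rotation about the sphere centre only, and the function names (`best_rotation`, `align_and_average`) are made up for the example.

```python
import itertools
import math


def best_rotation(a, b, iters=200):
    """Proper rotation R (3x3 nested lists) minimising sum |R a_i - b_i|^2.

    Horn's quaternion method; assumes unit vectors and a fixed pairing a_i <-> b_i.
    """
    n = len(a)
    # Cross-covariance: s[j][k] = sum over i of a_i[j] * b_i[k].
    s = [[sum(p[j] * t[k] for p, t in zip(a, b)) for k in range(3)] for j in range(3)]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = s
    big_n = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # Power iteration on N + n*I (positive semi-definite for unit vectors)
    # converges to the eigenvector of N's largest eigenvalue.
    quat = [0.9, 0.3, 0.2, 0.1]  # arbitrary start vector (an assumption)
    for _ in range(iters):
        quat = [sum(big_n[r][c] * quat[c] for c in range(4)) + n * quat[r]
                for r in range(4)]
        length = math.sqrt(sum(v * v for v in quat))
        quat = [v / length for v in quat]
    w, x, y, z = quat
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def rotate(R, p):
    """Apply the 3x3 matrix R to the point p."""
    return [sum(R[r][c] * p[c] for c in range(3)) for r in range(3)]


def align_and_average(a, b):
    """Try every pairing of the two sets, keep the best fit, then average.

    Returns (sum of squared differences, renormalised mean points).
    """
    best = None
    for perm in itertools.permutations(range(len(b))):
        b_perm = [b[i] for i in perm]
        R = best_rotation(a, b_perm)
        moved = [rotate(R, p) for p in a]
        err = sum(math.dist(p, t) ** 2 for p, t in zip(moved, b_perm))
        if best is None or err < best[0]:
            best = (err, moved, b_perm)
    err, moved, b_perm = best
    mean = []
    for p, t in zip(moved, b_perm):
        total = [u + v for u, v in zip(p, t)]
        length = math.sqrt(sum(v * v for v in total))  # zero only if p == -t
        mean.append([v / length for v in total])
    return err, mean
```

One difference worth noting: Eigen::umeyama also estimates a translation (and optionally a scale), whereas this sketch fits a rotation only, which seemed the natural simplification for points constrained to a unit sphere.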

Unfortunately, this permutations approach is intractable for larger numbers of points, so I show here only up to 5 points, which gives just a hint of the pattern for mean points on a sphere.
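To put a number on that: comparing two sets of n points means one rotation fit per permutation, so n! fits per pair. A quick sketch of the growth (the helper name `rotation_fits` is just for illustration):

```python
import math


def rotation_fits(n):
    """Number of permutations, and hence rotation fits, for one pair of n-point sets."""
    return math.factorial(n)


for n in (2, 3, 4, 5, 6, 10):
    print(n, rotation_fits(n))
# 2 2
# 3 6
# 4 24
# 5 120
# 6 720
# 10 3628800
```

Since the mean is built from many such pairwise alignments, the total cost is this count multiplied by the number of samples.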

2 points: (right angle)

3 points:

4 points:

-0.394284 -0.910857 -0.121984, -0.680957 0.712358 -0.169835, -0.983752 -0.0842168 0.158555, 0.184711 0.622359 -0.760626

5 points:

-0.295731 0.738672 -0.605728, 0.690733 0.0875932 -0.717785, -0.97845 0.0669067 -0.195341, -0.301121 -0.657821 -0.690361, -0.651082 -0.174766 0.738613

6 points:

0.129365 -0.627223 0.768021, -0.434599 -0.871754 -0.226204, 0.367076 -0.768696 -0.523795, -0.841728 -0.113547 0.527827, 0.769293 0.153797 -0.620109, -0.778213 0.13924 -0.61237

These at least show that the mean set of points on a sphere does not follow a trivial pattern. The points aren't in a plane or even within a radial cone.